TLDR: Google’s Veo 2, a cutting-edge AI video generation model from Google DeepMind, delivers up to 4K realism, precise camera control, and strong prompt adherence. It outperformed competitors such as OpenAI’s Sora in Google’s human-preference tests. Veo 2 aims to democratize video creation while supporting responsible use through watermarking and content filters. It is currently available through a waitlist on VideoFX and could transform video production for creators worldwide.

In a world increasingly dominated by visual content, AI video generation has emerged as a game-changer. Google DeepMind, a pioneer in artificial intelligence, is pushing the boundaries of this technology with its latest offering: Veo 2. This cutting-edge AI model is poised to change how we create videos, letting users turn their ideas into striking visuals.

Unveiling the Power of Veo 2

Veo 2 is not just an incremental upgrade; it represents a significant leap forward in AI video generation technology. Here are some of its key features that set it apart:

- Exceptional Visual Quality: Veo 2 can generate videos at resolutions up to 4K and, according to Google, extend them to minutes in length, beyond what competing models currently offer.

- Unparalleled Realism: The model’s enhanced understanding of real-world physics and human movement leads to remarkably realistic videos. This means smoother motion, more natural interactions between objects, and a significant reduction in artifacts that often plague AI-generated content.

- Precise Camera Control: Veo 2 offers filmmakers and creators granular control over their videos. Users can specify:
    - Lens types (e.g., 18mm for wide-angle shots)
    - Camera angles
    - Cinematic effects (e.g., shallow depth of field)

- Prompt Adherence: Veo 2 demonstrates exceptional accuracy in following user prompts, ensuring that the generated videos closely match the desired vision.

Accessing Veo 2 and Exploring Its Potential

While Veo 2 is not yet publicly available, Google is gradually granting access through its experimental platform, VideoFX. Currently, access is restricted to U.S. users over 18 through a waitlist. However, Google plans to make Veo 2 available on platforms such as YouTube Shorts and Vertex AI, expanding its reach to a wider audience.

The Importance of Responsible AI Development

As AI video generation technology advances, it’s crucial to address concerns about potential misuse. Google is taking proactive steps to ensure responsible use of Veo 2. These measures include:

- SynthID Watermarking: Veo 2 embeds SynthID, an invisible watermark, in its output so that AI-generated videos can be identified. This is a vital step in combating the spread of misinformation and deepfakes.

- Prompt-Level Filters: Google employs prompt-level filters to prevent Veo 2 from generating harmful or inappropriate content. This helps safeguard against the model being used for malicious purposes.

Veo 2 vs. Sora

Veo 2 has already made waves in the AI video generation landscape, particularly in comparison with OpenAI’s Sora.

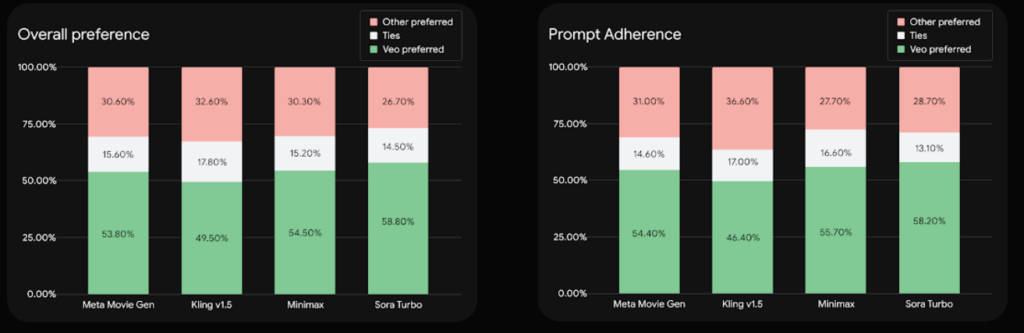

In Google’s human-preference evaluation on Meta’s MovieGenBench dataset, Veo 2 outperformed other leading models, including OpenAI’s Sora. Human raters compared videos generated from 1,003 prompts and preferred Veo 2 over Sora Turbo 58.8% of the time, while only 26.7% preferred Sora Turbo; the remaining 14.5% of judgments favored neither model. Veo 2 also scored higher than Sora Turbo on prompt adherence, showing that it translates user instructions into visual output more accurately. These results, reported by Google, suggest that Veo 2 could become a leading tool in AI video generation.

By lowering the barrier to producing high-quality video, Veo 2 could democratize video creation and give anyone with an idea the power to produce striking visuals once access widens beyond the current waitlist. As Google continues to develop this technology, it promises to transform video production and open up creative possibilities for everyone.